AI IDEs Need Moats

VS Code eliminated the switching cost for AI IDEs. They need to build moats to survive. Partnering with software vendors and new open source projects could help.

Martin Kleppmann and I gave a keynote interview for the Monster SCALE conference today. I always enjoy ScyllaDB’s conferences and this one was no exception. Check out our interview here. While you’re at it, watch Almog Gavra’s SlateDB talk, too!

I’ve avoided talking about AI on my newsletter thus far. The space is moving so rapidly. It feels futile to try and keep up, and anything written becomes stale very quickly. Still, I have been using AI daily for the past few years, primarily for coding. First with ChatGPT, then with Windsurf. I’m going to break my embargo for this post to cover some recent discussions I’ve had about AI IDEs like Windsurf and Cursor.

For the non-developers out there, Windsurf is an AI-powered agentic integrated development environment (IDE) akin to Cursor. In layman’s terms, these are applications that developers use to write code alongside an AI. Both Windsurf and Cursor are built on Visual Studio Code, and both are quite effective. I primarily use Windsurf’s AI features when writing unit tests and small scripts, be it Python, Bash, or GitHub Actions YAML. A few weeks back, I decided to check in and see if I should switch to Cursor.

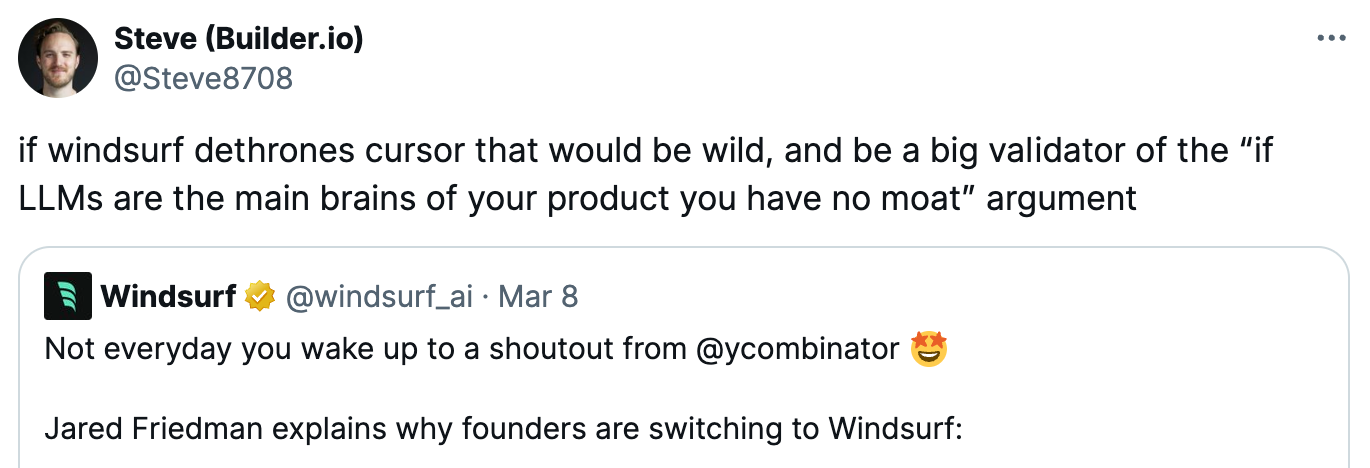

There was no consensus. My impression from the comments was that Windsurf was slightly better than Cursor. I decided to stay with Windsurf since I was already using it. Fast forward a few weeks, and this post on Twitter caught my eye:

Tom’s post validates my impression, but it also raises an interesting question about moats. As Tom says, since both Windsurf and Cursor are forks of VS Code with nearly identical interfaces, the switching cost is nearly zero. When I switched from VS Code to Windsurf, it took all of 30 minutes. I haven’t looked back. Switching from Windsurf to Cursor, I’m confident, would be the same.

It’s easy to think these IDEs are destined to be commodities. I don’t think so. The fact that Windsurf is unseating Cursor right now is a signal that, even with commodity LLMs, Windsurf is offering a better product than Cursor. It does make the space highly competitive, however. As Steve’s post says, the IDEs have to be more than API calls to OpenAI and Anthropic. What might this look like?

Last week, Josh Wills and I were discussing AI IDEs over coffee (he’s hiring at DatologyAI, by the way!). Josh made an off-hand comment that LLMs really struggle with dataframe libraries that aren’t Pandas; they hallucinate and assume you’re using Pandas. This resonated with me. I had the exact same experience when trying to learn Zig in 2023. The language was very new, and similar enough to Go and Python that ChatGPT would intermingle the three languages.

This dynamic is interesting: LLMs struggle with new infrastructure and tools. The same is true for proprietary software—both internal company code and closed source vendor software. There isn’t enough public data on the internet to train the LLMs on such software.

A poor LLM experience with new and proprietary software creates an interesting self-reinforcing cycle, one that is virtuous for incumbents and vicious for newcomers. Developers get a better experience working with legacy software that has thousands of Stack Overflow questions and GitHub repositories. That better experience raises the relative adoption cost of new software (or, equivalently, lowers the cost of using legacy software). Developers will stick with the existing software, which will give the AI IDEs and LLMs yet more data to train on. This, in turn, will further improve the developer experience for the existing software.

Proprietary vendors and new open source projects need to figure out how to break this cycle. Offering software that is far better than incumbents could justify the increased switching cost. Vendors could also build their own plugins that help with prompt engineering and retrieval-augmented generation (RAG). Standards such as Anthropic’s Model Context Protocol (MCP), llms.txt, and agents.json might also be adopted. Indeed, Nile ﹩ announced an MCP server for its product as I write this post.
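To make the RAG idea concrete, here is a minimal sketch of what a vendor plugin might do before a question reaches the LLM: find the most relevant documentation snippet and put it in the prompt, so the model doesn't fall back on a better-known library. Everything here is an assumption for illustration. "framelib" and its methods are made up, the scoring is a naive word-overlap count rather than real embeddings, and no actual LLM or MCP API is called.

```python
import re
from collections import Counter

# Hypothetical documentation snippets for an imaginary dataframe library, "framelib".
DOCS = {
    "select": "framelib: use df.pick('a', 'b') to select columns; there is no df[['a', 'b']] syntax.",
    "filter": "framelib: use df.where(col('a') > 1) to filter rows.",
    "join": "framelib: use left.combine(right, on='id') to join two frames.",
}


def tokenize(text):
    """Lowercase the text and count its alphabetic words."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def retrieve(question, docs, k=1):
    """Return the k snippets sharing the most words with the question."""
    q = tokenize(question)
    ranked = sorted(
        docs.values(),
        key=lambda text: sum((q & tokenize(text)).values()),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question, docs, k=1):
    """Prepend the retrieved snippets to the question as grounding context."""
    context = "\n".join(retrieve(question, docs, k))
    return f"Use only the documentation below.\n\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    print(build_prompt("How do I filter rows in framelib?", DOCS))
```

A real plugin would presumably swap the word-overlap scoring for embeddings and serve the snippets through something like an MCP server, but the shape is the same: retrieve first, then prompt.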

AI IDEs like Windsurf and Cursor seem well positioned to help with the adoption problem. IDE companies could partner with vendors to integrate tightly into the IDE’s agents. The companies might also help with fine-tuned models, smaller LLMs purpose-built on curated data, custom integrations, VS Code plugins, or even preferred placement in the IDE—all for specific languages, tools, and vendors. A partnership between Windsurf and a company bringing a new software product to market would be symbiotic. Windsurf would build a better (more sticky) IDE while the company it partners with would gain a better developer experience. Windsurf might even be able to charge for such services.

AI IDE companies could extend this work to internal company software as well. This is where Sourcegraph (the company that makes Cody) got its start. Internal company codebases are not typically publicly available, so LLMs know less about them. Both Cursor and Windsurf are already selling into the enterprise; they should be able to offer a wide range of tools for this use case.

And then there is GitHub. In the Bluesky thread above, Qian Li pointed out that GitHub already has access to many proprietary (private) repositories. They also offer Copilot. They, too, seem well positioned to compete as an AI IDE. I’m less confident in their ability to execute, however. Copilot has lagged behind its competitors despite strong competitive advantages.

So yes, AI IDEs are a very competitive space, but I believe they’ll develop moats around specialized models, partnerships, proprietary training data, and more.

Book

Support this newsletter by purchasing The Missing README: A Guide for the New Software Engineer for yourself or gifting it to someone.

Disclaimer

I occasionally invest in infrastructure startups. Companies that I’ve invested in are marked with a ﹩ in this newsletter. See my LinkedIn profile and Materialized View Capital for a complete list.