Nimble and Lance: The Parquet Killers

Everything you need to know about the new AI/ML storage formats from Meta and LanceDB.

Apache Parquet has been the de facto columnar data format on object storage for some time. Nearly every cloud data warehouse and data lake integrates with Parquet. But developers are recognizing its limits. Recently, two new formats were open sourced—one from Meta and one from LanceDB. In this post, I’ll dig into Nimble and Lance V2 (LV2) and give some thoughts.

Storage Format Primer

(You can skip this section if you know what a columnar file format is.)

Before I discuss Nimble and LV2, you need to know how a columnar storage format works. Meta’s Nimble YouTube video from VeloxCon 2024 does a good job summarizing, so I’ll borrow from it.

Row-based formats write data to disk as a sequence of row bytes. Consider a database table with a user_id and features column. When a new row is written, it’s stored as a sequence of bytes representing user_id followed by a sequence of bytes representing features.

Each red block on the right represents one row written to disk. Each row contains bytes for each column, one after the next (represented by green and orange boxes). Columnar file formats invert this sequence. Bytes are grouped by column, not by row.

Columnar formats are useful for data warehouse-style queries that typically do aggregation on a few columns but a large number of rows (e.g. SELECT state, SUM(price) FROM sales GROUP BY 1). In a row-based format, you’d have to read every row just to use one or two columns. With a column-based format, readers can read only the column bytes they need and take advantage of sequential IO performance gains.
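To make the difference concrete, here’s a minimal Python sketch of the two layouts. It’s a toy, not how Parquet or any real format lays out bytes: the table, the score column, and the function names are all made up for illustration, and every value is fixed width so offsets are easy to compute.

```python
import struct

# Hypothetical table of (user_id, score) rows.
rows = [(1, 9.5), (2, 3.0), (3, 12.25)]

ROW_FMT = "<qd"  # one int64 user_id followed by one float64 score


def encode_row_major(rows):
    """Row layout: user_id, score, user_id, score, ..."""
    return b"".join(struct.pack(ROW_FMT, uid, score) for uid, score in rows)


def encode_column_major(rows):
    """Columnar layout: all user_ids first, then all scores."""
    n = len(rows)
    ids = struct.pack(f"<{n}q", *(uid for uid, _ in rows))
    scores = struct.pack(f"<{n}d", *(score for _, score in rows))
    return ids + scores


def sum_scores_row_major(buf):
    """Has to unpack every row just to pick out the score field."""
    return sum(score for _, score in struct.iter_unpack(ROW_FMT, buf))


def sum_scores_column_major(buf, n):
    """Skips the user_id bytes and reads the scores as one contiguous slice."""
    start = n * 8  # n int64 user_ids come first
    return sum(struct.unpack_from(f"<{n}d", buf, start))


row_buf = encode_row_major(rows)
col_buf = encode_column_major(rows)
```

Both buffers hold the same bytes in a different order. The row-major reader touches every row; the columnar reader jumps to the one column it needs.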

Traditional columnar formats require the writer to buffer all data before writing it. The writer needs to know all of the user_ids before it can write the red block in the image above, which is a substantial burden.

Parquet (and ORC, a similar but less popular file format) solves this problem by introducing row groups (ORC calls them stripes). Row groups combine the row-based and column-based approaches. The idea is to partition the data into smaller groups of rows so that a writer can store just that set of rows in a columnar format. Because the partitions are relatively small, the writer doesn’t need to buffer the entire data set before writing the columnar data for that row group.
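A rough sketch of the idea, assuming a hypothetical RowGroupWriter class that collects row groups in a list instead of writing real bytes to a file:

```python
class RowGroupWriter:
    """Toy writer: buffers at most `row_group_size` rows, then pivots them to columns."""

    def __init__(self, column_names, row_group_size):
        self.column_names = column_names
        self.row_group_size = row_group_size
        self._buffer = []      # rows waiting to be flushed
        self.row_groups = []   # stand-in for data written to the file

    def write_row(self, row):
        self._buffer.append(row)
        if len(self._buffer) >= self.row_group_size:
            self._flush()

    def _flush(self):
        if not self._buffer:
            return
        # Pivot the buffered rows into one list of values per column.
        columns = {
            name: [row[i] for row in self._buffer]
            for i, name in enumerate(self.column_names)
        }
        self.row_groups.append(columns)
        self._buffer = []

    def close(self):
        self._flush()  # write the final, possibly short, row group
        return self.row_groups


writer = RowGroupWriter(["user_id", "features"], row_group_size=2)
for user_id in range(5):
    writer.write_row((user_id, [0.1 * user_id, 0.2 * user_id]))
row_groups = writer.close()
```

The writer never holds more than row_group_size rows in memory, yet each flushed group is stored column by column.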

The example above illustrates a table that’s been divided into two row groups (orange and green). Each row group contains a sequence of columns (represented by the blue and red blocks).

Pages, represented as green blocks in the image, are chunks of each column. Pages exist so a reader needn’t read all of the column data for a row group; it can read only a subset of the data if it’s sorted in the same manner as the query. All of these tricks are just ways to partition the table data to make it friendly for data warehouse-style queries.
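Here’s a small sketch of how page skipping can work, assuming each page records the min and max of its values. The function names and the dictionary layout are invented for illustration; real formats store these statistics in their own metadata structures.

```python
def build_pages(values, page_size):
    """Split a column into pages and record min/max statistics for each."""
    pages = []
    for start in range(0, len(values), page_size):
        chunk = values[start:start + page_size]
        pages.append({"min": min(chunk), "max": max(chunk), "values": chunk})
    return pages


def pages_to_read(pages, lo, hi):
    """Return indexes of pages whose [min, max] range overlaps [lo, hi]."""
    return [i for i, p in enumerate(pages) if p["max"] >= lo and p["min"] <= hi]


sorted_ids = list(range(100))          # a column sorted by user_id
pages = build_pages(sorted_ids, page_size=25)
needed = pages_to_read(pages, lo=30, hi=40)
```

Because the column is sorted, a query for user_ids 30 through 40 only overlaps one of the four pages. On unsorted data the min/max ranges overlap and far fewer pages can be skipped.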

Parquet’s Problems

Parquet is an incredibly well done storage format. It has enabled composable database systems and provided an integration format for data lakes and cloud data warehouses. But it’s begun to show its age. LanceDB’s Lance v2 post summarizes the issues well:

Point lookups: Reading a single row requires reading an entire page, which is expensive.

Large values: Columns with large values make the row group size hard to tune. If the row groups are small, only a small number of rows will be included in each page (since the large values will take most of the page). If a row group is large, writes become memory intensive.

Large schemas: Some datasets have 1000s of columns. Parquet requires readers to load the schema metadata for all columns to read just one—a costly and wasteful operation.

Limited encodings: There has been an explosion of encoding styles for data of different shapes and sizes. These new encoders do a better job of compacting and compressing data in different scenarios. Parquet provides only a fixed set of dated encoders.

Limited metadata: Parquet only allows encoders to store metadata (statistics, min, max, nulls, and more) in pages. Some encoders would benefit from storing metadata at the row group, stripe, or file level.

These problems are particularly acute for machine-learning (ML) and artificial intelligence (AI) style uses. Machine learning data often has columns with a long list of floats, as the features column in the screenshots above illustrates. AI use-cases often need text, images, or video near their feature vectors for training and retrieval-augmented generation (RAG) use cases. Parquet struggles with such use cases. Nimble and LV2 address these issues.
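The large-values problem is easy to see with some arithmetic. The sketch below assumes a hypothetical two-column table (an 8-byte user_id and a 1 MiB image per row) and estimates how many bytes each column chunk holds and how much the writer must buffer before it can flush a row group. The sizes are illustrative, not measurements.

```python
MIB = 1024 * 1024


def row_group_footprint(rows_per_group, avg_value_bytes):
    """Bytes per column chunk, plus the total the writer buffers before flushing."""
    chunks = {name: rows_per_group * size for name, size in avg_value_bytes.items()}
    return chunks, sum(chunks.values())


# Hypothetical average value sizes per column.
avg_value_bytes = {"user_id": 8, "image": 1 * MIB}

small_chunks, small_total = row_group_footprint(100, avg_value_bytes)
large_chunks, large_total = row_group_footprint(100_000, avg_value_bytes)
```

With 100 rows per group, the writer buffers about 100 MiB, but the user_id chunk is a mere 800 bytes, far too small to read efficiently. With 100,000 rows per group, the user_id chunk is a healthier 800 KB, but the writer now has to buffer roughly 100 GiB. No single row group size suits both columns.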

Nimble and LV2

Nimble takes an incremental approach to Parquet’s problems. The format handles wide schemas by storing metadata in FlatBuffers rather than Protobuf, so readers decode only the metadata bytes they actually use. Nimble’s encoding layer is extensible; new encodings can be added by the user. Metadata is also made extensible by treating it as part of the encoding payload rather than a rigid schema in the file footer. While stripes (row groups) still exist, their footers have been moved to the end of the file.
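The wide-schema point deserves a quick illustration. FlatBuffers lets a reader access a field in place without parsing the whole buffer. The sketch below imitates that property with a plain offset table; it is not the FlatBuffers wire format or Nimble’s footer layout, and the function names and JSON blobs are stand-ins chosen for brevity.

```python
import json
import struct


def encode_footer(column_meta):
    """Layout: column count, offset table (n + 1 entries), then one blob per column."""
    blobs = [json.dumps(m).encode() for m in column_meta]
    offsets, pos = [], 0
    for blob in blobs:
        offsets.append(pos)
        pos += len(blob)
    offsets.append(pos)  # end marker so every blob has a start and an end
    header = struct.pack("<I", len(blobs))
    table = struct.pack(f"<{len(offsets)}I", *offsets)
    return header + table + b"".join(blobs)


def read_column_meta(footer, index):
    """Decode one column's metadata without touching any other column's blob."""
    (n,) = struct.unpack_from("<I", footer, 0)
    start, end = struct.unpack_from("<2I", footer, 4 + 4 * index)
    body = 4 + 4 * (n + 1)  # blobs begin after the header and offset table
    return json.loads(footer[body + start:body + end])


footer = encode_footer(
    [{"name": f"col_{i}", "encoding": "plain"} for i in range(1000)]
)
meta = read_column_meta(footer, 742)
```

Reading column 742 costs two integer lookups and one small decode. A format that must parse the entire metadata message first pays for all 1,000 columns.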

LV2 is far more radical. Parquet’s row groups have been completely removed. LV2 files are simply a collection of data pages, column metadata, and a footer—that’s it. In the name of extensibility, LV2 has no type system and no built-in encodings. Both are pluggable. LV2 is so thin, the whole spec is a mere ~200 line Protobuf file.
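To get a feel for how little structure that is, here’s a toy file with the same three parts: data pages, column metadata, and a footer. This is a sketch under my own assumptions, not the Lance spec. The JSON page encoding, the 16-byte footer, and the function names are all invented.

```python
import io
import json
import struct

FOOTER = struct.Struct("<QQ")  # (column metadata offset, column metadata length)


def write_file(columns, rows_per_page):
    out = io.BytesIO()
    meta = {name: [] for name in columns}
    num_rows = len(next(iter(columns.values())))
    # Pages are written as they fill up, so pages of different columns interleave.
    for start in range(0, num_rows, rows_per_page):
        for name, values in columns.items():
            page = json.dumps(values[start:start + rows_per_page]).encode()
            meta[name].append({"offset": out.tell(), "length": len(page)})
            out.write(page)
    meta_bytes = json.dumps(meta).encode()
    meta_offset = out.tell()
    out.write(meta_bytes)
    out.write(FOOTER.pack(meta_offset, len(meta_bytes)))
    return out.getvalue()


def read_column(buf, name):
    """Read the footer, then the column metadata, then only that column's pages."""
    meta_offset, meta_len = FOOTER.unpack_from(buf, len(buf) - FOOTER.size)
    meta = json.loads(buf[meta_offset:meta_offset + meta_len])
    values = []
    for page in meta[name]:
        start = page["offset"]
        values.extend(json.loads(buf[start:start + page["length"]]))
    return values


data = write_file(
    {"user_id": [1, 2, 3, 4, 5], "features": [[0.1], [0.2], [0.3], [0.4], [0.5]]},
    rows_per_page=2,
)
user_ids = read_column(data, "user_id")
```

There are no row groups anywhere. A column is just whatever list of pages its metadata points to, and those pages need not sit next to each other in the file.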

Extensibility Is a Double-Edged Sword

Engineers love to make things extensible. It’s an escape hatch for bad decisions, the promise of flexibility, and an openness to new ideas. But extensibility causes fragmentation.

Each library must implement a wide range of encodings. A library that doesn’t support an encoding will not be able to read or write its data. Users will see read errors. This is a frustrating user experience.

In an enterprise, this is a common pitfall. Team A encodes a column with some fancy new encoding that suits their use case. Team B wants to read the data but can’t because their library (probably written in another language) doesn’t support the encoding.

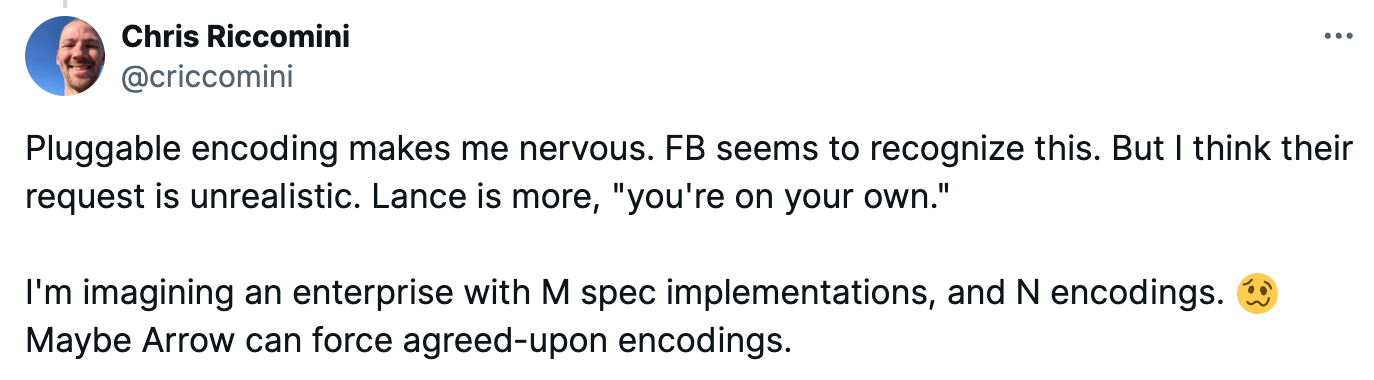

Meta is pleading with users—please don’t build more implementations. (Emphasis added.)

More than a specification, Nimble is a product. We strongly discourage developers to (re-)implement Nimble’s spec to prevent environmental fragmentation issues observed with similar projects in the past. We encourage developers to leverage the single unified Nimble library, and create high-quality bindings to other languages as needed.

They’re dreaming. It’s not written in Rust, so that’s going to happen. There will be others, too. Engineers just can’t resist reimplementing specs in their native languages.

Lance is more laissez-faire:

If a user tries to read a file written with this encoding, and their reader does not support it, then they will receive a helpful error “this file uses encoding X and the reader has not been configured with a decoder”. They can then figure out how to install the decoder (or implement it if need be). All of this happens without any change to the Lance format itself.
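In code, that behavior might look something like the sketch below. The Reader class, the registry, and the exception are hypothetical and are not Lance’s API; zlib stands in for whatever fancy encoding Team A picked.

```python
import zlib


class UnsupportedEncodingError(Exception):
    pass


class Reader:
    """Toy reader with a pluggable decoder registry."""

    def __init__(self):
        # Only a pass-through decoder is built in.
        self._decoders = {"plain": lambda payload: payload}

    def register_decoder(self, name, decode_fn):
        self._decoders[name] = decode_fn

    def decode(self, encoding, payload):
        decoder = self._decoders.get(encoding)
        if decoder is None:
            raise UnsupportedEncodingError(
                f"this file uses encoding {encoding!r} and the reader "
                "has not been configured with a decoder"
            )
        return decoder(payload)


reader = Reader()
page = zlib.compress(b"feature bytes")

try:
    reader.decode("zlib", page)
except UnsupportedEncodingError as err:
    message = str(err)  # Team B sees the error before installing the decoder

reader.register_decoder("zlib", zlib.decompress)
decoded = reader.decode("zlib", page)
```

The file format never changes, but Team B’s read only succeeds once someone has written and installed a zlib decoder for their language.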

And we’re just talking about encodings. LV2’s choice to ditch both encodings and type systems really freaked me out. I spent a bunch of time with type systems when working on Recap. It’s really hard to get types right.

This is hell for data engineers. At least, that was my first reaction. I’ve since realized that LV2 has adopted an unstated philosophy: Arrow will dictate the standard.

LV2 has already implemented Arrow’s types (Schema.fbs) as its first type system. This is an excellent choice. Likewise, the encodings that Arrow supports will dictate what other implementations must adopt. I really like this.

To Stripe or Not to Stripe

It’s not totally clear to me whether LV2’s decision to ditch stripes is a net win or not. Column I/O is not sequential in LV2. Reads can be scattered across pages all over the file. LanceDB’s Weston Pace had a reasonable response when I asked about this:

Pages should be large enough that there is no real advantage to having them sequential. E.g. 10 random 4MB reads has same perf as 10 sequential 4MB reads. This should be true for both disk and cloud. Also, LV2 favors larger pages (compression chunk size is independent)
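Weston’s argument is easy to sanity-check with a back-of-the-envelope cost model. The sketch below is illustrative and not anything from LanceDB. It assumes each read costs a fixed per-request latency plus transfer time, that reads are issued one at a time, and that sequential pages could be fetched in a single coalesced request. The default latency and bandwidth values are placeholders, not measurements.

```python
def read_time_ms(num_requests, bytes_per_request, latency_ms, bandwidth_bytes_per_ms):
    """Total time: fixed cost per request plus time to transfer the bytes."""
    transfer = (num_requests * bytes_per_request) / bandwidth_bytes_per_ms
    return num_requests * latency_ms + transfer


def scattered_overhead(page_bytes, pages=10, latency_ms=0.1,
                       bandwidth_bytes_per_ms=2_000_000):
    """Ratio of `pages` separate reads to one coalesced read of the same bytes."""
    scattered = read_time_ms(pages, page_bytes, latency_ms, bandwidth_bytes_per_ms)
    coalesced = read_time_ms(1, pages * page_bytes, latency_ms, bandwidth_bytes_per_ms)
    return scattered / coalesced


big_pages = scattered_overhead(4 * 1024 * 1024)   # 4 MiB pages
small_pages = scattered_overhead(4 * 1024)        # 4 KiB pages
```

Under these assumptions, ten scattered 4 MiB reads take about 1.04 times as long as one coalesced read, because transfer time dominates. Shrink the pages to 4 KiB and the ratio climbs to about 8.5, because per-request latency dominates. The claim holds as long as pages stay large relative to latency times bandwidth.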

This led to a very interesting exchange between Weston and WarpStream’s [$] co-founder, Ryan Worl. Ryan, too, is worried that non-sequential pages will require more reads.

Both engineers know what they’re talking about. Tradeoffs are always tough. I suspect LV2 has opted for tuning that works better for ML and AI use cases. I’m interested to see how these formats perform against more traditional analytical queries—large scans on a few columns that span many pages.

EDIT: LanceDB’s Weston Pace has published Columnar File Readers in Depth: Parallelism without Row Groups, which further discusses their choice to abandon row groups.

It’s Early Days

Nimble and Lance are both young. They’ve chosen to focus on ML and AI workloads first. Parquet is weak for these use cases, and there’s a real need for a better format. But the ultimate goal for both formats is to replace Parquet for online analytical processing (OLAP)-style queries, too. Chang She, LanceDB’s CEO, tells me:

Don’t want to put words in Nimble’s mouth but Lance has so far been very focused on vectors / images / videos + indexing. Basically OLAP + Search + Training. AFAICT that’s not the stated focus for Nimble.

Nimble’s GitHub says:

Nimble is meant to be a replacement for file formats such as Apache Parquet and ORC.

Neither format is close to this yet. Nimble is missing predicate pushdown—a key feature for analytical queries. LV2 has only basic encoding support. The performance numbers in Meta’s talk show a machine-learning use case executing twice as fast on Nimble. Given the favorable workload, one wonders how an OLAP workload would look. Probably not great right now. Lance includes some numbers in their (somewhat dated) CMU presentation:

Given all this, it’s obvious that the formats are not ready for prime time yet. Most analytical use cases are still best served by Parquet. That’s totally fine; it’s expected. These are young projects. I’m bullish on both, but particularly LV2. It’s a radical shift, but its integration with Arrow and thoughtful design make it really compelling to me.

Disclaimer

I occasionally invest in infrastructure startups. Companies that I’ve invested in are marked with a [$] in this newsletter. See my LinkedIn profile for a complete list.