S3 Is Showing Its Age

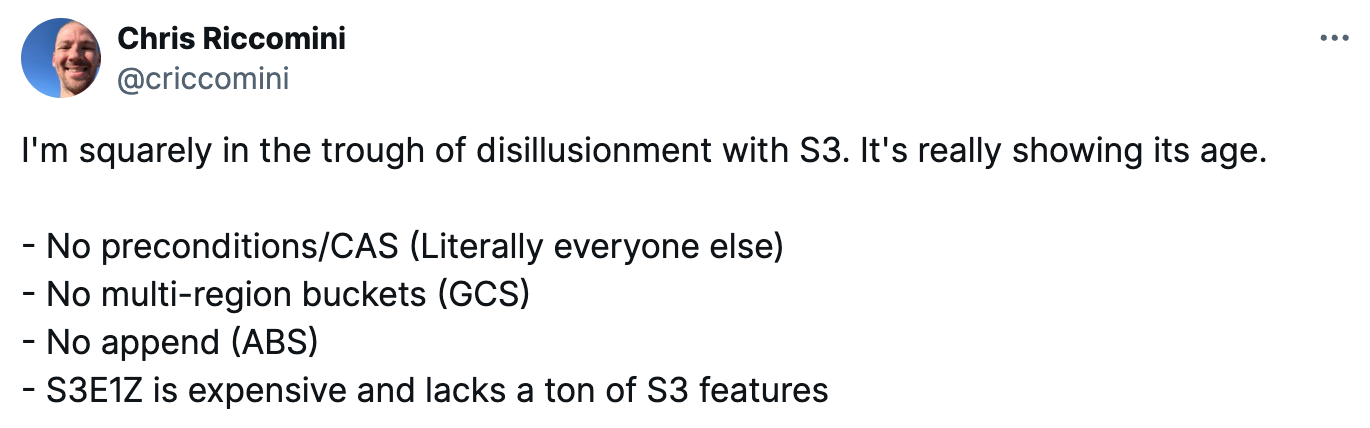

I'm squarely in the trough of disillusionment with S3.

There’s no denying that S3 is a feat of engineering. Building and Operating a Pretty Big Storage System is a top-tier technology flex. But S3’s feature set is falling behind its competitors. Notably, S3 has no compare-and-swap (CAS) operation—something every single other competitor has. It also lacks multi-region buckets and object appends. Even S3 Express is proving to be lackluster.

These missing features haven’t mattered much for data lakes and offline use cases. But new infrastructure is using object storage as their primary persistence layer—something I’m excited about. Here, S3’s feature gaps are a bigger problem.

Missing Preconditions

Preconditions (also known as compare-and-swap (CAS), conditionals, If-None-Match, If-Match, and so on) allow a client to write an object only if a certain condition is met. A client might wish to write an object only if it doesn’t exist, or update an object only if it hasn’t changed since the client last read it. CAS makes this possible. Such operations are used often for locks and transactions in distributed systems.

S3 is the only object store that doesn’t support preconditions. Every other object store—Google Cloud Storage (GCS), Azure Blob Store (ABS), Cloudflare Ridiculously Reliable (R2) storage, Tigris, MinIO—all have this feature.

Instead, developers are forced to use a separate transactional store such as DynamoDB to enforce transactional operations. Building a two-phase write between DynamoDB and S3 is not technically difficult, but it’s annoying and leads to ugly abstractions.

S3 Express One Zone Isn’t S3

I was really excited about S3 Express One Zone (S3E1Z) when it first came out. The more time I spend with it, the less impressed I am. The first wart that surfaced was a new directory bucket type, which Amazon introduced for Express.

But the gaps don’t stop here. S3E1Z is missing a ton of standard S3 features including object version support, bucket tags, object locks, object tags, and MD5 checksum ETags. The complete list is pretty staggering.

S3E1Z buckets can’t be treated like a normal S3 buckets. As with CAS operations, developers must design around these deficiencies. And because S3E1Z is not multi-zonal, developers are left to build quorum writes to multiple availability zones for higher availability.

Factor in S3E1Z’s high storage cost of $0.16/gig—twice elastic block store’s (EBS) general purpose SSD (gp3) cost—and S3E1Z looks more like an expensive EBS with a half-implemented S3 API.

No Dual-Region/Multi-Region Buckets

S3’s availability feature gaps go beyond S3E1Z. S3 doesn’t have dual-region or multi-region buckets. Such buckets are useful for higher availability. Google offers a wide range of options in this area.

While not a must-have, higher availability buckets are certainly nice to have.

Embracing Reality

The dream is that developers are offered an object store with all of these features: low latency, preconditions, dual-region/multi-region, and so on. But we live in reality, where engineers face a choice: abandon S3 or build around these gaps.

Turbopuffer is my favorite example of a company that’s gone all-in on abandoning S3.

The gamble they’ve made is that S3 will eventually get preconditions. This gamble seems reasonable since Amazon has all of the required building blocks (DynamoDB and S3) and every competitor is beating them here. I’m making the same gamble with the cloud native LSM I’m working on.

The challenge with this approach is inter-cloud network cost. All cloud providers charge for network egress. Data transferred to infrastructure running outside amazon web services (AWS) will incur network egress fees. But the inter-cloud cost for AWS users isn’t as bad as you might think. Simon Eskildsen, Turbopuffer’s founder and CEO, has written about this extensively.

The benefit of this is that Turbopuffer has built a beautiful and minimalist design with just three components: a Turbopuffer binary, a RAM/SSD cache, and Google Cloud Storage.

For many, this will seem extreme. The alternative is to house metadata in a transactional store outside of S3.

Once you open yourself up to a separate metadata plane, you’ll find other use cases for it. The path to the slope of enlightenment is to recognize that S3 is an object store not a file system. By embracing DynamoDB as your metadata layer, systems stand to gain a lot.

Ultimately, the decision to abandon S3 or embrace its shortcomings depends on a system’s use cases and design goals. Preconditions on S3 and a unified S3E1Z API would make this decision a lot easier, though.

Book

Support this newsletter by purchasing The Missing README: A Guide for the New Software Engineer for yourself or gifting it to someone.

Disclaimer

I occasionally invest in infrastructure startups. Companies that I’ve invested in are marked with a [$] in this newsletter. See my LinkedIn profile for a complete list.